The story of the last decade of professional learning has centered on the idea of “evidence for practice.” With ESSA’s definition of professional learning, it’s clear that programs have to be linked to research that shows impact on students.

But choosing a research-backed framework is the easy part. Translating it into practice — also known as “implementing with fidelity” — is a taller order.

So what does “implement with fidelity” actually mean? It sounds so easy when you put it on the project map. But one list item doesn’t cover delivering a program exactly as intended by the authors while accounting for the unique circumstances, schedules and demands of your district.

Typically when we’re talking about fidelity, we’re talking about the implementation drivers that lead to fidelity. These drivers tend to fall into three key areas: leadership vision, organizational alignment to execute that vision at scale, and individual staff’s competency to enact it in the classroom.

Today: Part 1 of a conversation with two real-life practitioners on how to optimize these drivers in a school district setting, track implementation success in real time, and understand what works and why.

(Quotes have been lightly edited and condensed for clarity. See the whole conversation at kickup.co/midyearchecks!)

Kibler:

Youngstown is a district that had been in a constant struggle of achievement for over a decade. And with that comes that kind of constant turnover — every three to five years, a new leader that has a new program to do.

So basically, we’ve had “okay, we’re going to do this: we’ll give you a piece of professional development, we’ll do it, and we’ll wait to see if it works.” And really, for us, time and time again it wasn’t enough. Simply saying “here’s some professional development and we expect you to go do it the way we taught you the first time” isn’t realistic. We really need to monitor what’s going on in our classrooms. For us, it was the Gradual Release of Responsibility (GRR), and a good set of foundational practices.

Just like students, when teachers learn something new, they don’t always get it perfect the first time. It’s important to give feedback on what they’re doing right or maybe incorrectly so [the training] can yield the outcome that we want. So it goes back to definition — if we’re not doing it the way it’s intended, we’re just not going to see the results. And I think that over time, we saw a lot of that: things were being implemented, but not with fidelity.

And so when we put the GRR in place, we wrote it out one section at a time, followed up with monitoring and coaching over an eight-week period before we went on to the next one. We wanted to be able to say “Hey, you’re doing this part right,” before giving [teachers] a wide-spread framework.

Swartz:

In the state of Iowa, our literacy achievement hasn’t really progressed the way we wanted it to, and we knew we needed to help support that work with our schools. We felt like we’d provided really good professional learning for teachers, but had been doing a “go forth and see what happens” model.

We decided that we really had to be in this together and focus on followup support. After the professional development, what can we do to really be there working hand-in-hand with teachers to make sure that it’s getting implemented the way it is expected to? So we’ve tried to become more partners in that process, both in looking at implementation and giving them time to practice because yeah, it can be clunky at first.

Kibler:

“Clunky” is almost too nice of a word – it’s messy! You really have to be in there with [teachers] and give them support, because I think that’s part of showing that you’re willing to work with them and avoid that [impression] of monitoring as a punitive thing, instead of a coaching thing.

Swartz:

We don’t want to be seen as the [Area Education Agency] coming in and doing this to teachers. We’re working on this together. We’ve really tried to collaborate with the coaches in the building, so that they feel like they’re part of this, and they’re a part of the observation of implementation as well as the administrators.

That’s the other thing that we wanted: we all know that leadership is important in these schools. How do we help administrators to increase their [own] knowledge about what it should look like as well? So we’re really trying to be a partner with all those different roles within the schools.

Swartz, cont’d:

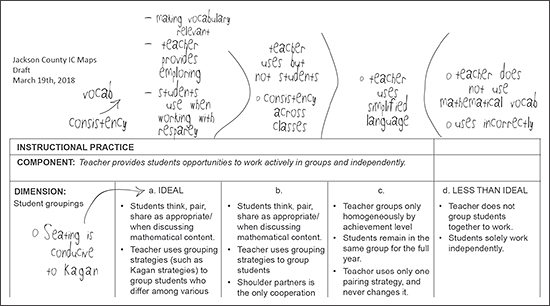

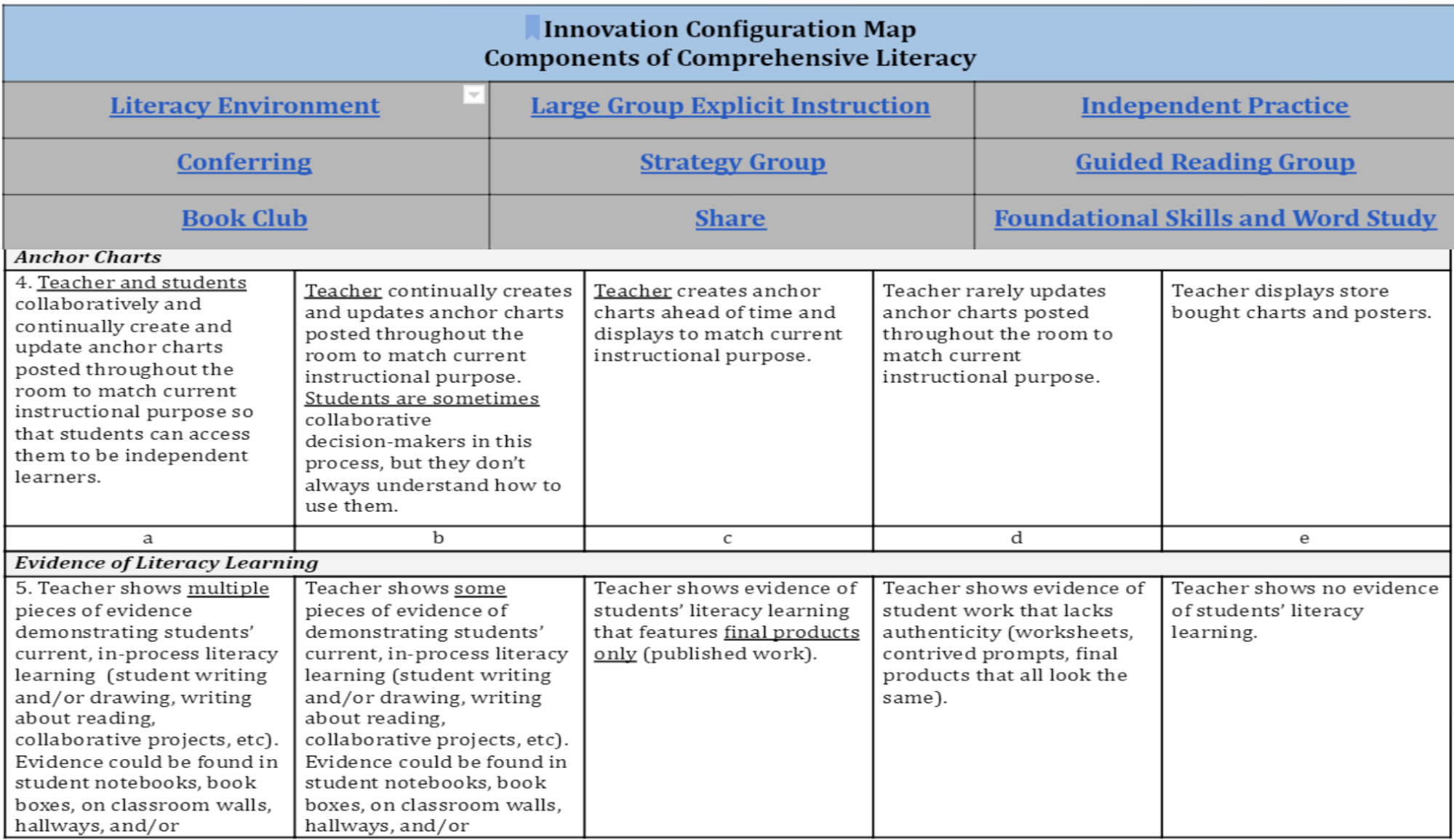

An innovation configuration map helps paint the picture of what the innovation should actually look like to you if you were implementing it with fidelity. We use the current research on what best practice should be and put it into this [format], so what columns A and B actually show is “If you’re doing it well, this is what it will look like.” We want people over in A, but if they’re in column B we know we’re getting them where we need to be.

Swartz, cont’d:

The additional columns are more of what we call non-examples. What this does for teachers is lets them see “Where am I at in this process?” It’s not about “I gotcha,” it’s about “Where are you, and how can you move to the next step to get to where you need to be?”

We use the innovation configuration map both when we go in and do observations [of] where we feel teachers are, and teachers also use it for their own self-reflection. We compare those two as we do observations, and then we can have those conversations with individual teachers about whether or not we’re on the same page, and what we need to do to get there.

One of the interesting things is that early on, when teachers self-reflect, what we notice is they mark themselves closer to the left-hand side than the right-hand one. If we do this a couple of times during the year, often what we see is the second time it looks like they’ve regressed, but it’s not really that they’ve regressed; it’s just that they didn’t know what they didn’t know at the beginning. Now they’re like, “You know what, I really am NOT where I thought I was.” So this framework really helps us look at patterns and trends.

KickUp note: That dip is a common phenomenon in self-assessments. You might expect a perfectly straight line going upward, but an initial dip is actually a great sign that they’re getting it. They’re continuing to gain knowledge and skills at assessing their own knowledge and skills.

Kibler:

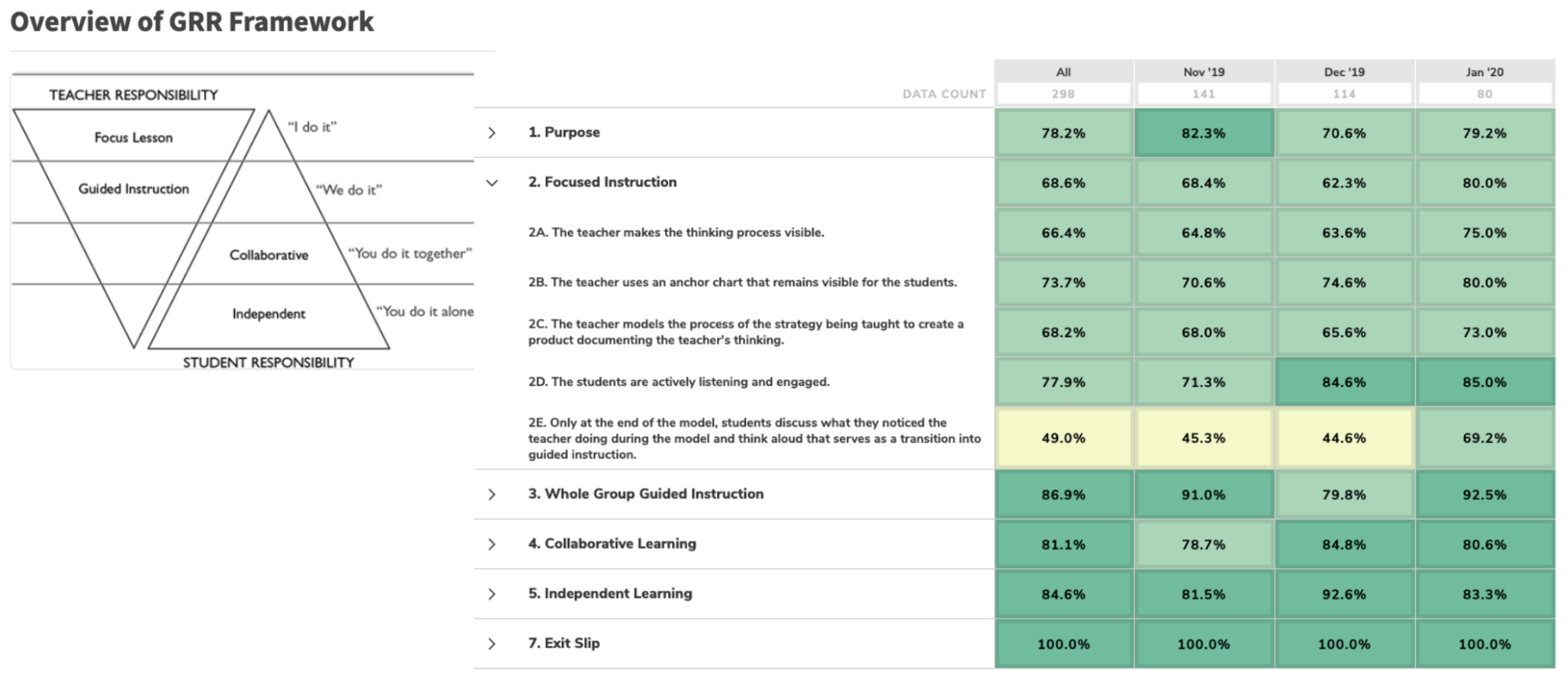

The [Gradual Release of Responsibility], simply put, is a framework of “you know, I do, we do, and then you do.” I, as the teacher, am going to show you what I want you to do or learn or master. Then we do it together, then you do it — maybe with a classmate or maybe on your own, depending on the scale or lesson.

That’s the framework in a nutshell, but we broke apart each one of those segments and developed them [into PD] over time and put them back together. As you can see, there are seven different sections.

Kibler, cont’d:

Administrators and coaches that are going into a classroom, you know, they aren’t necessarily going in at the same time every time, so we wanted to make sure that wherever they were, they could give some feedback on the particular section that they were observing.

Kibler, cont’d:

So within [the GRR] we built in a piece called a “bite-size action step” that the teacher would receive which was, from the observer’s point of view, “what’s the one change that I can make between this lesson and the next lesson that’s going to help my students learn better?” Maybe that’s just as simple as taking an anchor chart and moving it from an area that’s far away to an area that’s easier for students to access, or a different way of assessing the students or pushing their rigor. We’re trying to make that feedback loop from our coaches and principals to the teacher a little bit easier, so that they’re on the same page and able to do more coaching-sized bits rather than looking at it as an evaluation.

We took the GRR framework and applied it across our K-12 schools and allowed principals to focus in on the areas that they felt their teachers really needed.

This is just the first part of our summary of Kim and Greg’s conversation — click here for part 2 or watch the full video here!
