Professional development feedback gets a bad rap. District leaders and PD directors often consider it subjective and shallow, focusing instead on verifiable metrics: hours clocked, attendance logged and sessions completed.

But participant feedback can be a treasure trove of untapped, actionable data, and innovative PD leaders are using it to evaluate programs formatively and gauge their success in the moment.

We recently talked to the directors of two Texas districts about the ways they’re listening to — and using — post-PD feedback.

Couldn’t join us this time? Watch the session on YouTube, or read on for the key takeaways, tips and insights:

Comments have been lightly edited and condensed for clarity.

Anna Jackson: One of the things that we’ve wrestled with is making sure that people trusted us enough to give us quality feedback… They worry, “Oh, is this going to come back on me if I make a negative comment? Is that somehow a negative reflection on me?” So initially we really had to work hard to earn their trust in order to get enough responses, and to be very clear that the data we were collecting was anonymous.

We had to make a decision: is it more important to us to have very little data and know exactly who it came from? (And it’s probably going to come from the people who are really happy or really mad.) Or is it more important to have better overall data with a little less ability to filter, but be able to be more strategic in our planning and our follow-up?

Dr. Glenda Horner: We updated one of our surveys because we thought we were asking too many questions that would allow us to pinpoint the person on that campus… We want our folks to trust us. We want them to be able to give us honest answers and not think, “Ooh, if I answer that demographic question, they may be able to pinpoint me and know that I’m the one who had something less than positive to say,” or whatever. So that’s really important in that trust cycle.

KickUp: Finding that middle ground can be as simple as putting very clear language on your surveys that explains what those questions are being used for. On the back end, you can ask for participants’ email addresses, but only for the purpose of tying responses to demographic attributes (what grade level they teach, what building they’re in, what content areas they cover) so that you can see high-level trends without connecting responses to names. It does mean that you may not be able to follow up with individual people for support, but it gives you that higher-level context. It does require building trust, but even just talking about it openly and including that kind of messaging on the survey can go a long way toward earning it.

Anna Jackson: When we make a change, we label it and say “Based on what you said in previous surveys, we are making this change.” We do that so that they’re always making that connection, that “We’re listening, we’re listening, we want to hear you, we want to respond to you.” Because we work for them. They don’t work for us. We’ve got to make sure that what we’re designing is what is meeting their needs, because they’re our customer.

Anna Jackson: In addition to the things we’re doing with our surveys, we have begun using KickUp to keep our coaching logs as well. Every time our coaches go onto a campus, they’re able to log the time they spent there, who they saw and what kind of support they provided. They’re able to connect each log to our coaching cycles so that we can see what kind of support is being provided and for how long, which helps us identify trends over time… and give feedback to principals on what we’re doing on their campuses. It also helps us talk to our school board about how we’re using these coaches and how we’re being intentional with the work.

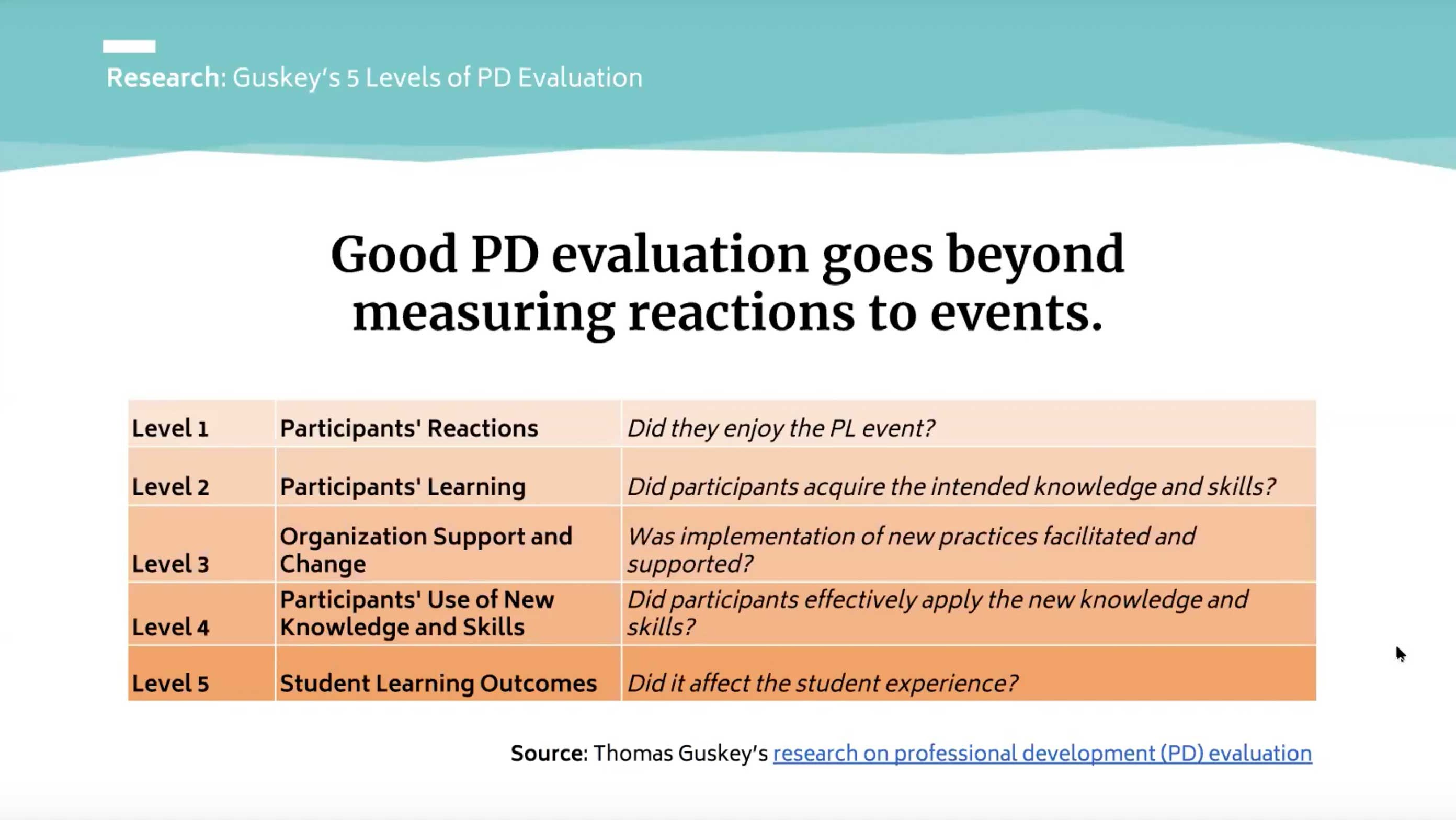

Being able to connect PD to that coaching piece has been really big for us in terms of making sure that we’re hitting level three [of Thomas Guskey’s Levels of PD Evaluation], which is about, “What kind of ongoing supports and organizational things do you need in order to make those changes?” So we’ve been able to connect our coaching data straight to our PD feedback, and provide one more layer of support through our coaches.

Dr. Glenda Horner: You really can’t argue with data, right? We’re able to put [the numbers] in front of our board and our superintendent, as well as our campus leaders, and say, “Hey, what are we doing? Is what we’re doing really mattering?” [Data strategy] gives us a platform to collect all of that longitudinally.
